In my scenario, I get 1000 JSON files per day, which must be analyzed with Power BI. So, I will get approx. 380 million rows per year. The system has an initial load of circa. 500 Mio. rows. So, I think a data model is mandatory to perform analysis and a live watch of the data. To do this, I need some dimensions which must be connected to the fact data.

I push the data via a C# script, at this point, many thanks to a very good colleague – Stephan Tomke – for providing me this awesome program, to a Azure Event Hub and staging the data inside a SQL database. In this table, I don’t have aby relationships to the dimensions. To perform this, it is not a option to do this with SSIS, ADF or some other ETL tools, because the business needs real time data.

To do a real time processing, I decided to add a table trigger inside the SQL database to get the needed identifiers from the dimensions:

alter TRIGGER TR_Audit_Employees

ON dbo.eh

FOR INSERT

AS

INSERT INTO dbo.eh2

(

Id,

TotalNumber,

SuccessfulUploads,

FailedUploads,

ProcessDate,

UploadTypeId,

UploadStatus,

ElapsedTime,

UploadSize,

ErrorCode,

ErrorMessage

)

select Id,

TotalNumber,

SuccessfulUploads,

FailedUploads,

ProcessDate,

(

select id from dbo.uploadtype x where d.uploadtype = x.uploadtype

),

d.UploadStatus,

ElapsedTime,

UploadSize,

ErrorCode,

ErrorMessage

FROM inserted D

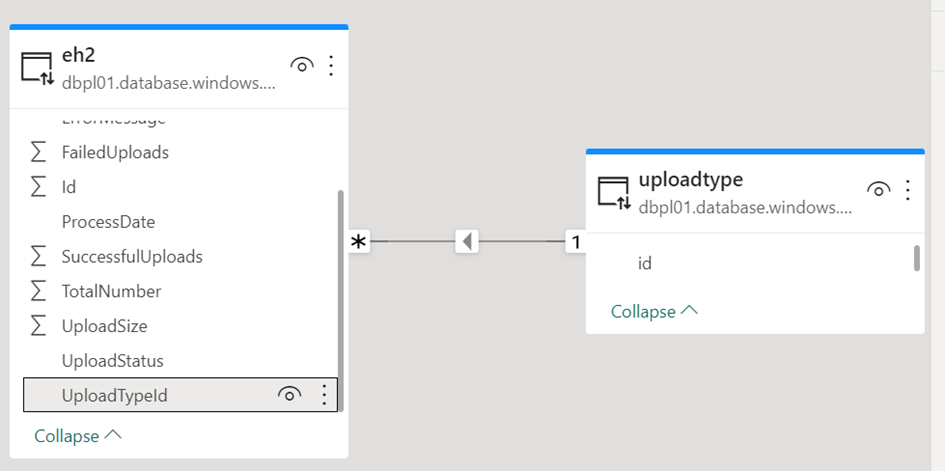

Now, I can connect to the tables and built up an efficient model:

If you add an automatic page refresh or using a change detection measure, you can provide a near real time analysis.