I extended the PowerShell script a bit so that it now submits a more complex JSON payload, and I also ensured that the script runs reliably every 10 seconds.

https://www.flip-design.de/?p=1495

    # Load System.Web once for HttpUtility.UrlEncode
    Add-Type -AssemblyName System.Web

    # Event Hub resource URI without scheme: <namespace>.servicebus.windows.net/<eventhub>
    $URI = ""
    $Access_Policy_Name = "RootManageSharedAccessKey"
    $Access_Policy_Key = ""

    # Machine profiles that are sent in rotation
    $profiles = @(
        @{
            id = "Machine01"
            location = @{
                city = "München"
                zip = "80331"
                lat = 48.13743
                lon = 11.57549
            }
        },
        @{
            id = "Machine02"
            location = @{
                city = "Hamburg"
                zip = "20095"
                lat = 53.55034
                lon = 10.00065
            }
        },
        @{
            id = "Machine03"
            location = @{
                city = "Berlin"
                zip = "10117"
                lat = 52.52001
                lon = 13.40495
            }
        }
    )

    $counterFile = Join-Path $PSScriptRoot 'counter.txt'
    $endpoint = "https://$URI/messages?timeout=60&api-version=2014-01"

    while ($true) {
        # Build a fresh SAS token; it expires now + 1800 seconds
        $Expires = ([DateTimeOffset]::Now.ToUnixTimeSeconds()) + 1800
        $SignatureString = [System.Web.HttpUtility]::UrlEncode($URI) + "`n" + [string]$Expires
        $HMAC = New-Object System.Security.Cryptography.HMACSHA256
        $HMAC.Key = [Text.Encoding]::ASCII.GetBytes($Access_Policy_Key)
        $Signature = $HMAC.ComputeHash([Text.Encoding]::ASCII.GetBytes($SignatureString))
        $Signature = [Convert]::ToBase64String($Signature)
        $SASToken = "SharedAccessSignature sr=" + [System.Web.HttpUtility]::UrlEncode($URI) + "&sig=" + [System.Web.HttpUtility]::UrlEncode($Signature) + "&se=" + $Expires + "&skn=" + $Access_Policy_Name

        # Read the position in the rotation from the counter file
        if (Test-Path $counterFile) {
            $counter = [int](Get-Content -LiteralPath $counterFile)
        } else {
            $counter = 0
        }

        $idx = $counter % $profiles.Count
        # Named $machineProfile because $profile is a PowerShell automatic variable
        $machineProfile = $profiles[$idx]

        $payload = @{
            machine = @{
                id = $machineProfile.id
                temperature = Get-Random -Minimum 60 -Maximum 80 # adjust if needed
            }
            location = $machineProfile.location
        }

        # Persist the position for the next iteration
        $next = ($counter + 1) % $profiles.Count
        Set-Content -LiteralPath $counterFile -Value $next

        $json = $payload | ConvertTo-Json -Compress
        $headers = @{
            "Authorization" = $SASToken
            "Content-Type" = "application/json; charset=utf-8"
        }

        # Send the body as UTF-8 bytes so that umlauts survive in Windows PowerShell 5.1
        Invoke-RestMethod -Uri $endpoint -Method Post -Headers $headers -Body ([Text.Encoding]::UTF8.GetBytes($json))

        # Wait 10 seconds before sending the next event
        Start-Sleep -Seconds 10
    }
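
The token logic from the script can also be wrapped in a function, which makes it easier to reuse and to test in isolation. The following is only a minimal sketch under a few assumptions: the function name `New-EventHubSasToken` and its parameters are hypothetical names of my own and not part of any module, and the resource URI is expected in the form `<namespace>.servicebus.windows.net/<eventhub>`. The steps themselves are the same as in the script above.

```powershell
Add-Type -AssemblyName System.Web

# Hypothetical helper (not part of any module): builds a SAS token
# with the same steps as the script.
function New-EventHubSasToken {
    param(
        [Parameter(Mandatory)] [string] $ResourceUri,  # <namespace>.servicebus.windows.net/<eventhub>
        [Parameter(Mandatory)] [string] $PolicyName,
        [Parameter(Mandatory)] [string] $PolicyKey,
        [int] $LifetimeSeconds = 1800
    )

    $expires    = [DateTimeOffset]::UtcNow.ToUnixTimeSeconds() + $LifetimeSeconds
    $encodedUri = [System.Web.HttpUtility]::UrlEncode($ResourceUri)

    # String to sign: URL-encoded resource URI, a newline, the expiry as Unix time
    $stringToSign = $encodedUri + "`n" + $expires

    $hmac      = New-Object System.Security.Cryptography.HMACSHA256
    $hmac.Key  = [Text.Encoding]::UTF8.GetBytes($PolicyKey)
    $hash      = $hmac.ComputeHash([Text.Encoding]::UTF8.GetBytes($stringToSign))
    $hmac.Dispose()

    $signature = [System.Web.HttpUtility]::UrlEncode([Convert]::ToBase64String($hash))

    "SharedAccessSignature sr=" + $encodedUri + "&sig=" + $signature + "&se=" + $expires + "&skn=" + $PolicyName
}
```

Inside the loop, the token could then be created with a single call such as `New-EventHubSasToken -ResourceUri $URI -PolicyName $Access_Policy_Name -PolicyKey $Access_Policy_Key`.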

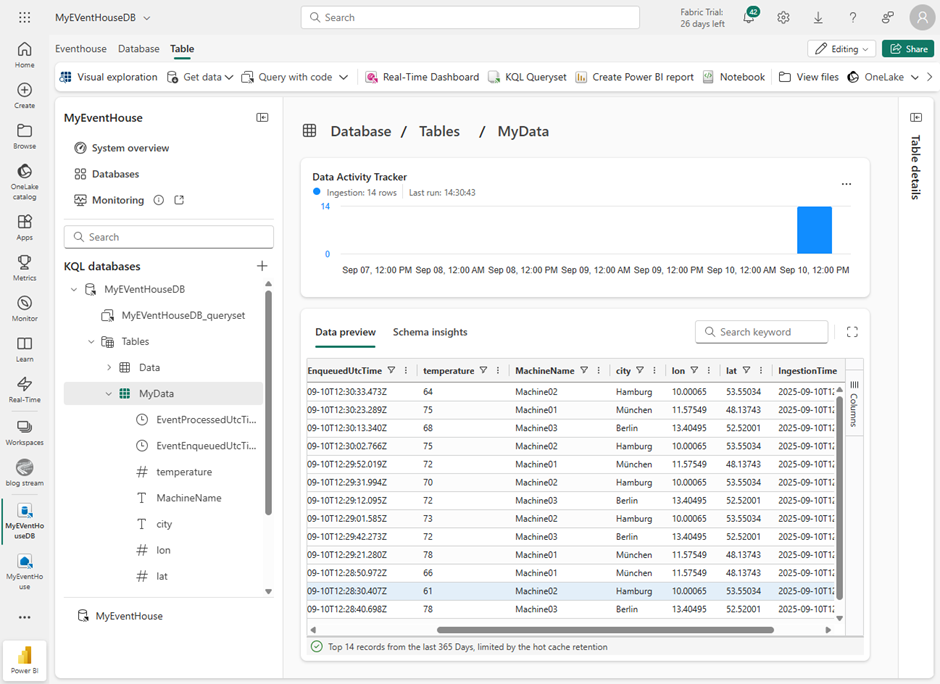

I believe that during development it makes sense to transmit a JSON structure that is similar or even identical to the one used in the production environment. This way, the metadata can be used to automatically create the table in the Kusto database and to define all other ETL steps within the data flow in Fabric Real-Time Intelligence.
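
To make the structure concrete, here is a small sketch that builds one event in the same shape as the script and prints it as JSON. The temperature value is just a sample, and `[ordered]` is only used so that the properties keep a stable order in the output; neither detail is required by the Event Hub.

```powershell
# One sample event in the same shape as the script's payload
$payload = [ordered]@{
    machine  = [ordered]@{
        id          = 'Machine02'
        temperature = 72          # sample value; the script uses Get-Random
    }
    location = [ordered]@{
        city = 'Hamburg'
        zip  = '20095'
        lat  = 53.55034
        lon  = 10.00065
    }
}

# -Depth is stated explicitly so that deeper nesting is not cut off later
$json = $payload | ConvertTo-Json -Depth 3
$json
```

The result is a document with the two top-level objects `machine` and `location`, which are the hierarchies that show up later in the data flow. `ConvertTo-Json` serializes only two levels by default, which is just enough for this payload; a more deeply nested structure needs a larger `-Depth`.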

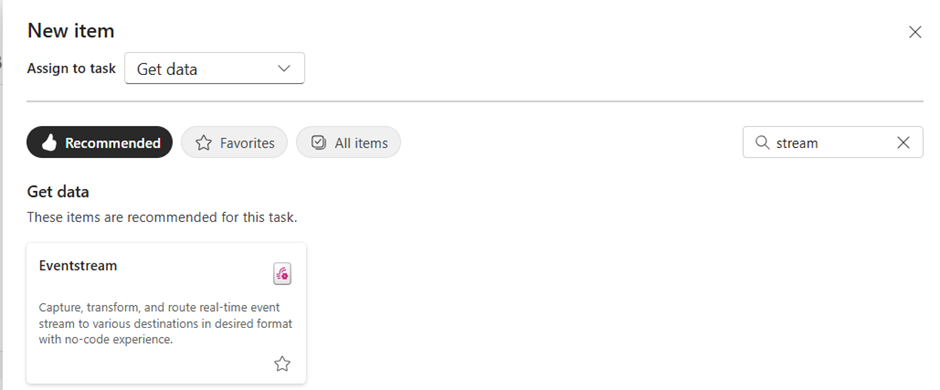

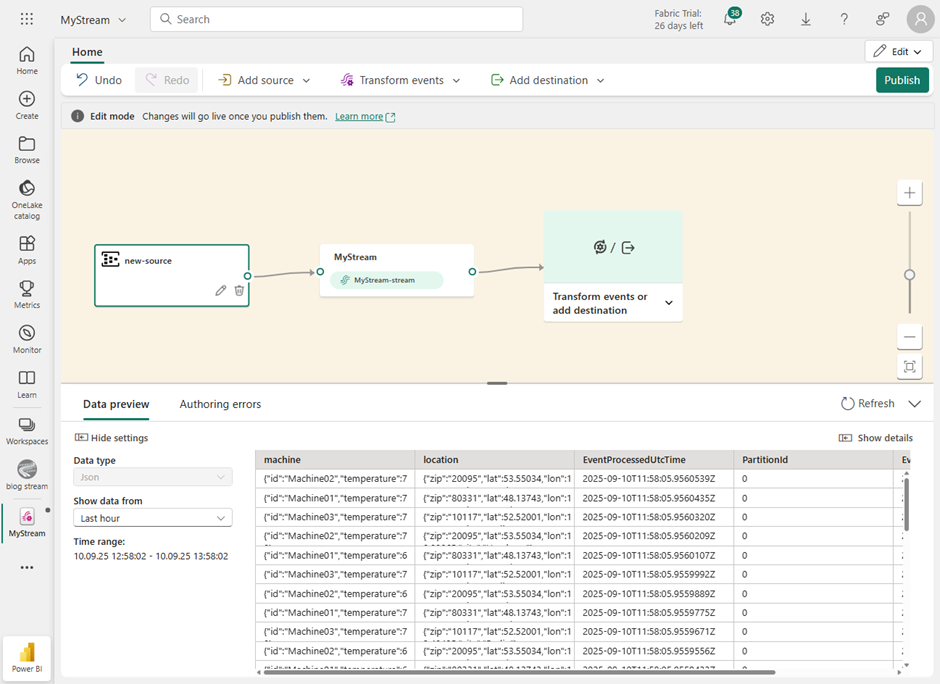

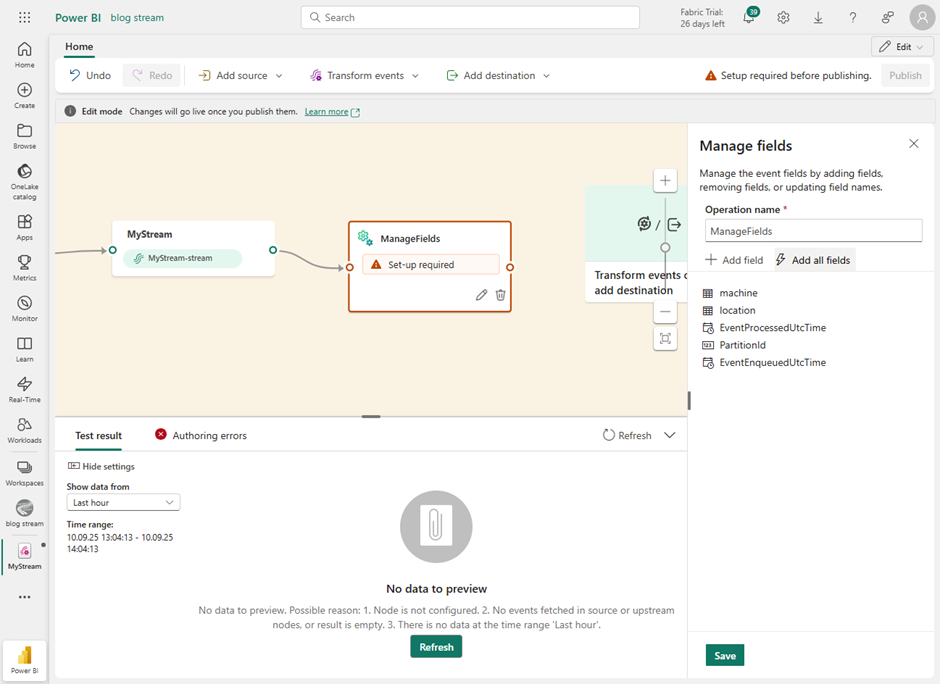

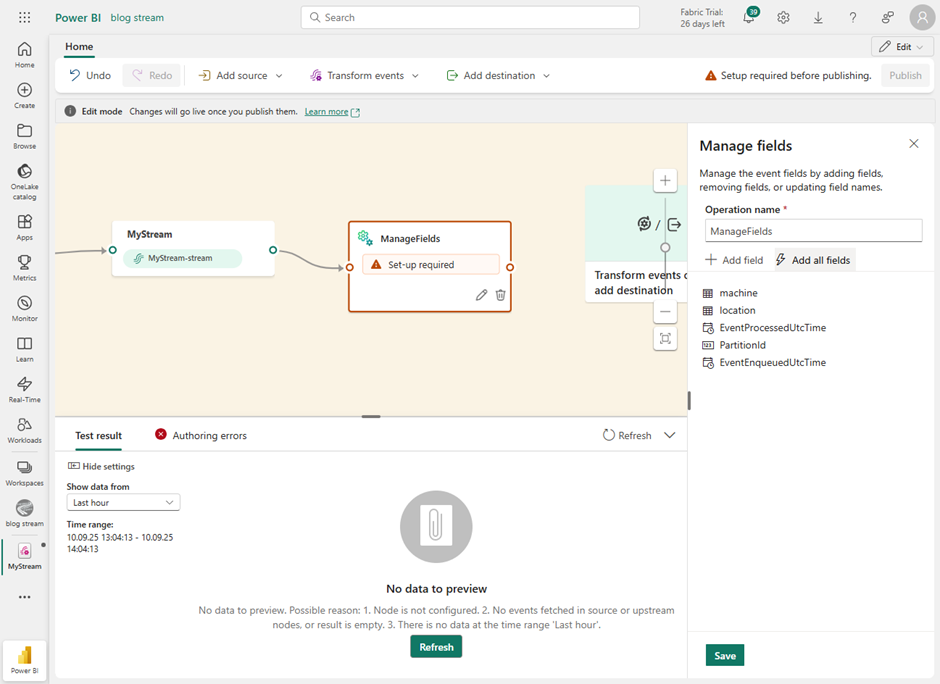

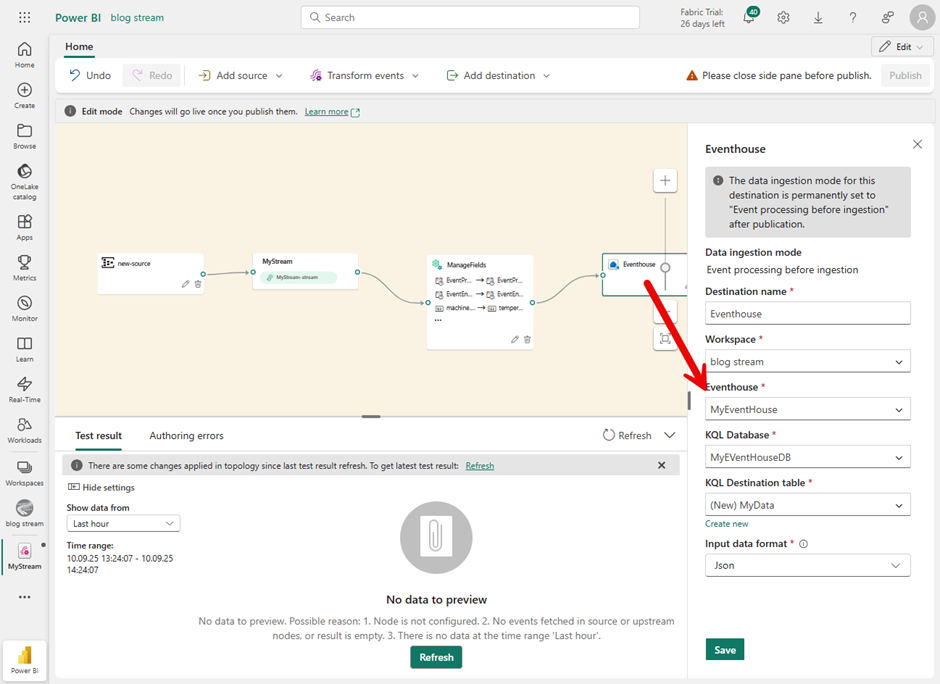

Next, I created a Fabric Eventstream to capture and process the data from the Azure Event Hub within Fabric.

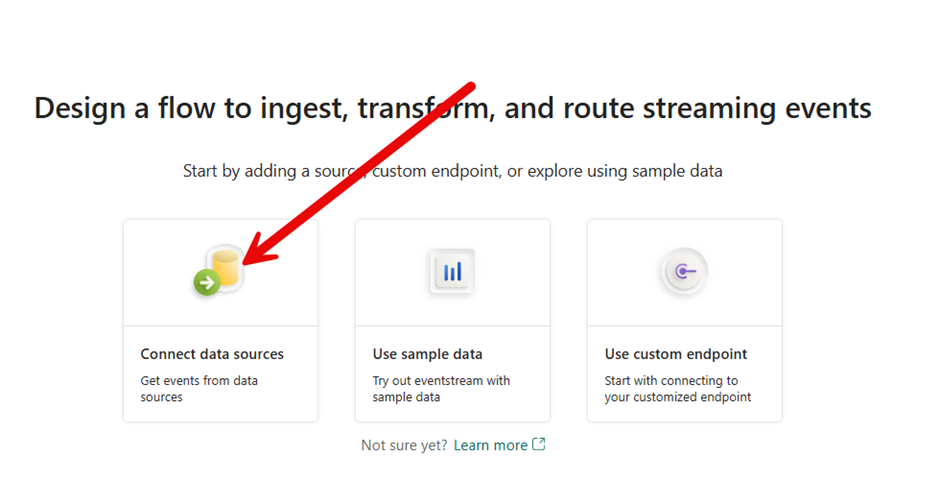

Then I created a data flow:

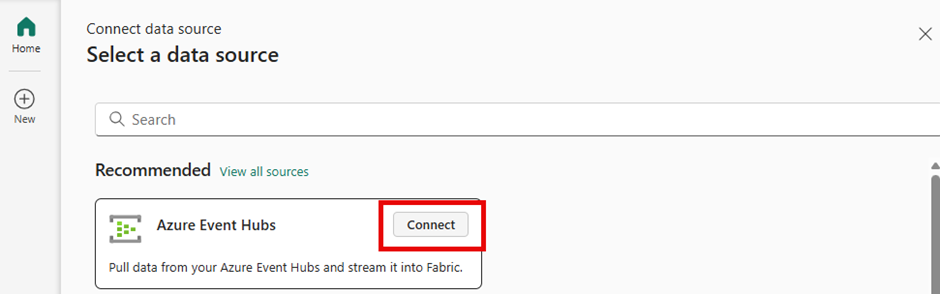

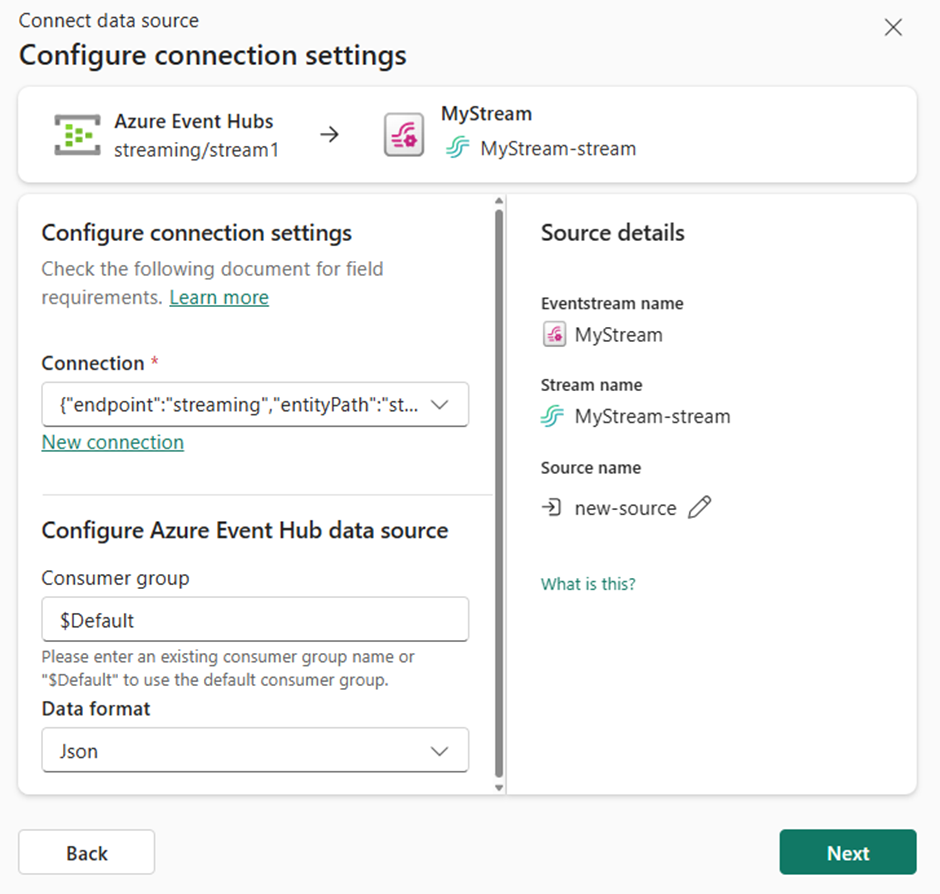

Then I connected it to the Azure Event Hub as the data source.

As long as the script keeps running, you can see the incoming data.

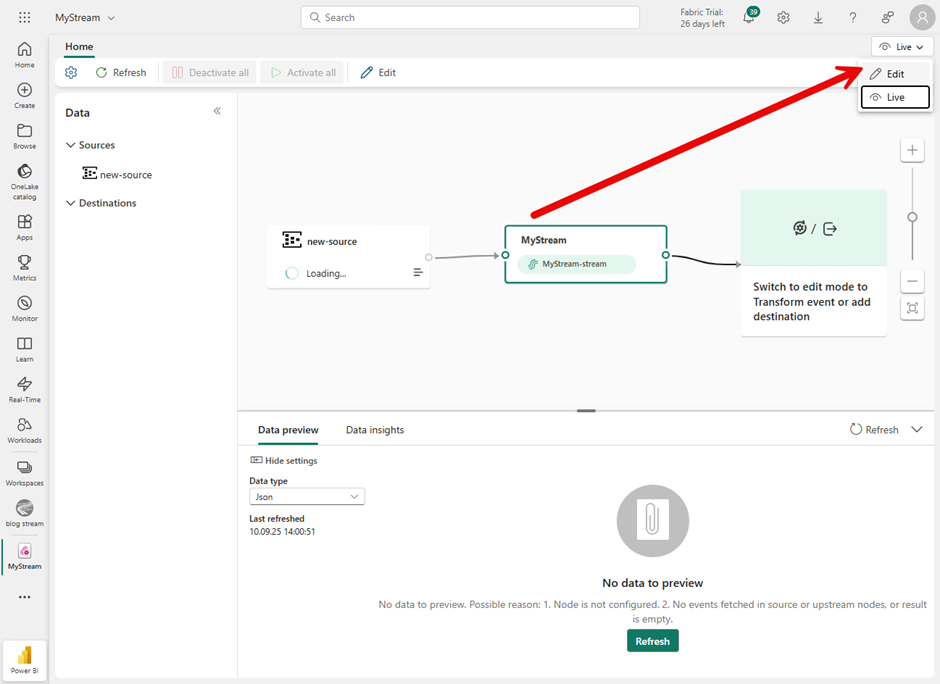

Before continuing with the next steps, I recommend publishing this data flow. Otherwise, some functions, such as expanding hierarchies within JSON structures, are not fully available. After publishing, you have to switch back to edit mode.

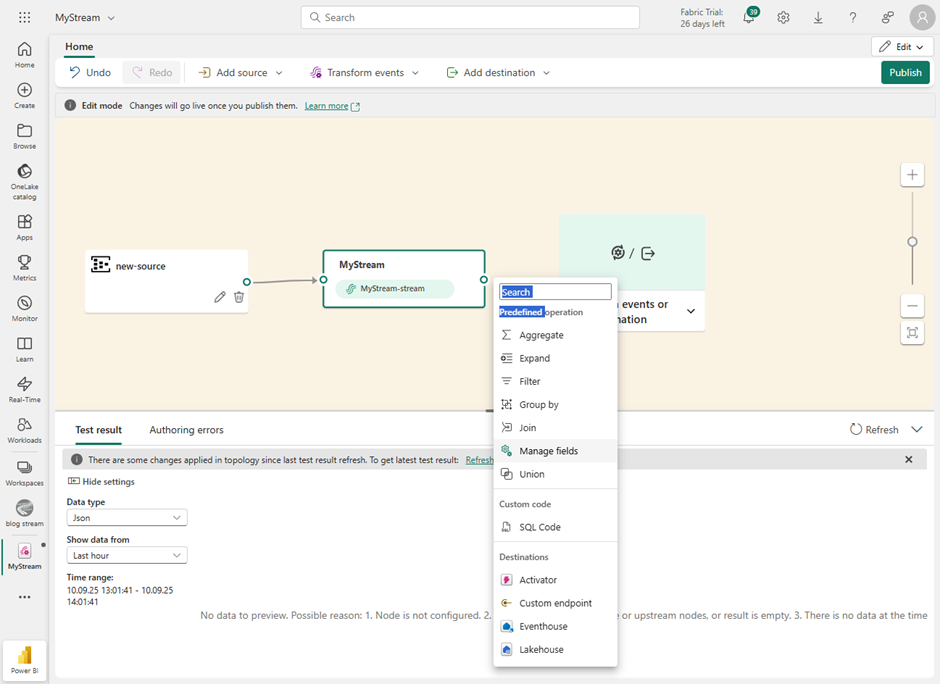

To expand the JSON, for example, first remove the arrow between the data source and the target. Then add a task called Manage Fields in between, which can be used to expand the nested structures.

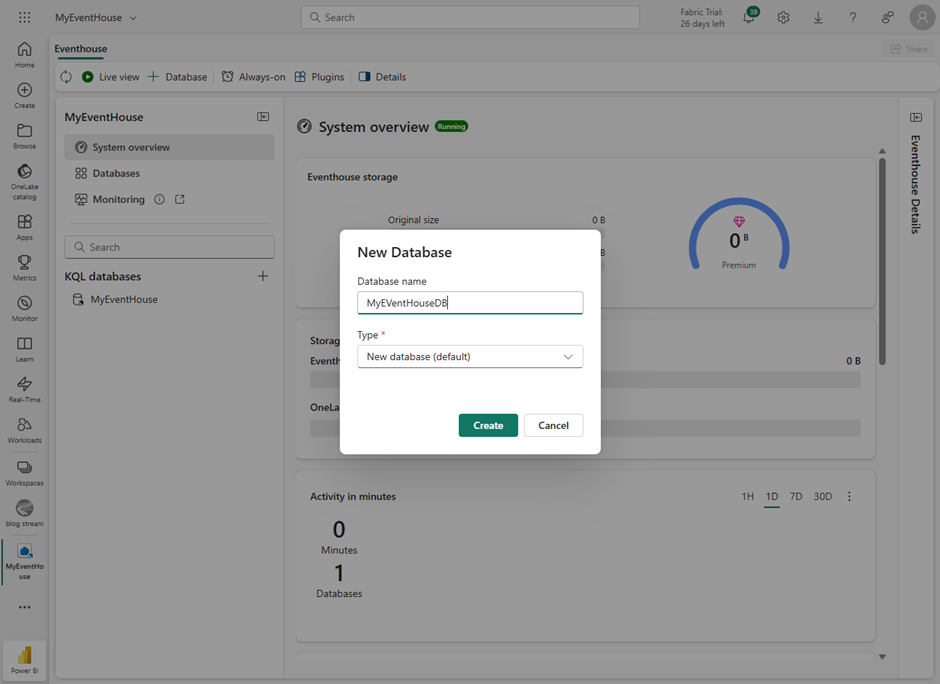

Now you can add new fields from the hierarchy structure. Finally, I added a data sink in the form of an Eventhouse.
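
What the expansion does to a single event can be pictured as flattening the nested JSON into one record with plain columns. The following sketch only illustrates that idea in PowerShell; it is not what Fabric runs internally, and the function name `ConvertTo-FlatEvent` and the column names are hypothetical choices of mine.

```powershell
# Hypothetical helper: flattens one nested event into a single flat record
function ConvertTo-FlatEvent {
    param([Parameter(Mandatory)] [string] $Json)

    $e = $Json | ConvertFrom-Json

    # One column per leaf value of the hierarchy
    [pscustomobject]@{
        machine_id  = $e.machine.id
        temperature = $e.machine.temperature
        city        = $e.location.city
        zip         = $e.location.zip
        lat         = $e.location.lat
        lon         = $e.location.lon
    }
}

$sample = '{"machine":{"id":"Machine03","temperature":65},"location":{"city":"Berlin","zip":"10117","lat":52.52001,"lon":13.40495}}'
$flat = ConvertTo-FlatEvent -Json $sample
$flat | Format-List
```

Each leaf of the hierarchy ends up as its own field, which is what allows all values to be stored in a single target table later on.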

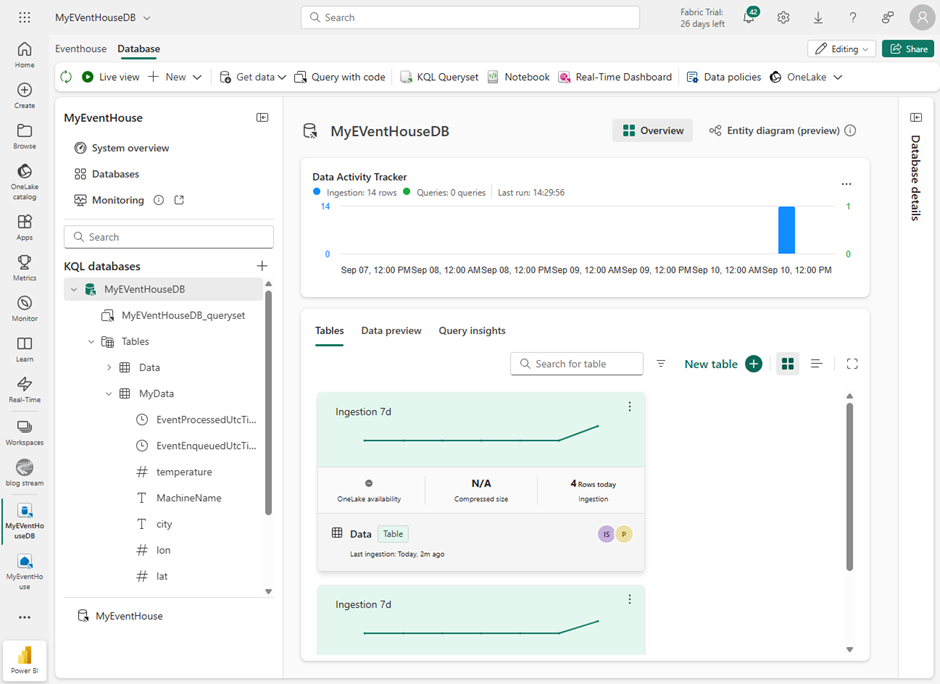

Within this Eventhouse, I then created a KQL database.

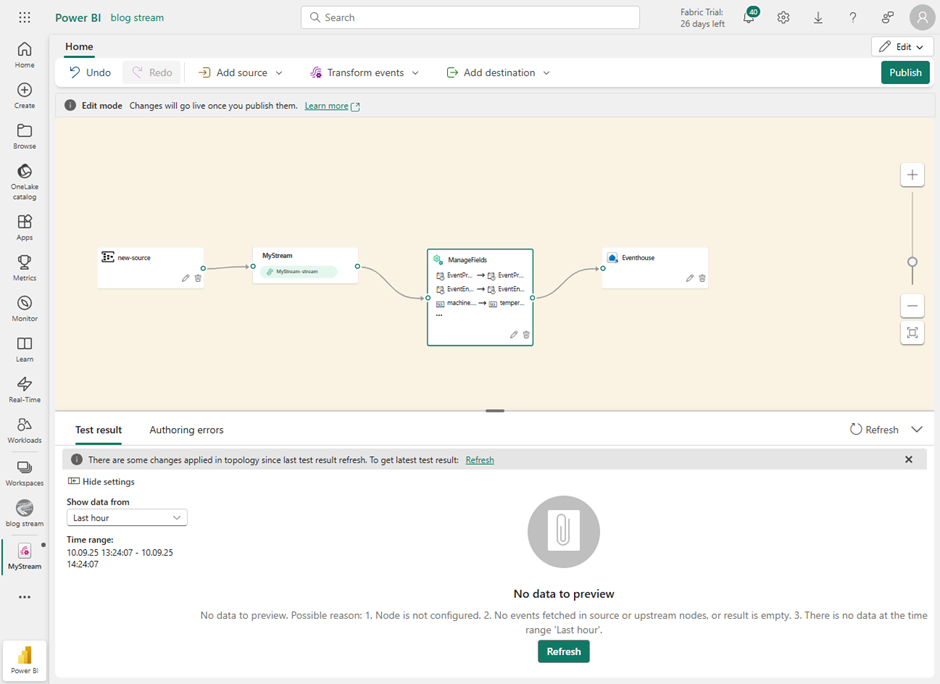

Back in the data flow, select the newly created data sink as the target. Since I want to store all fields in one table, I can simply create a single target table.

Now it’s time to save or publish this data flow. From then on, any transferred data is stored in the target table.

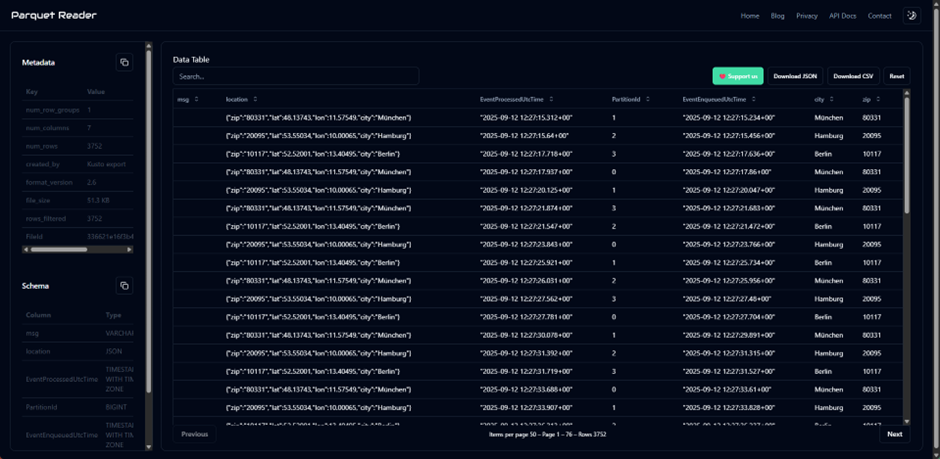

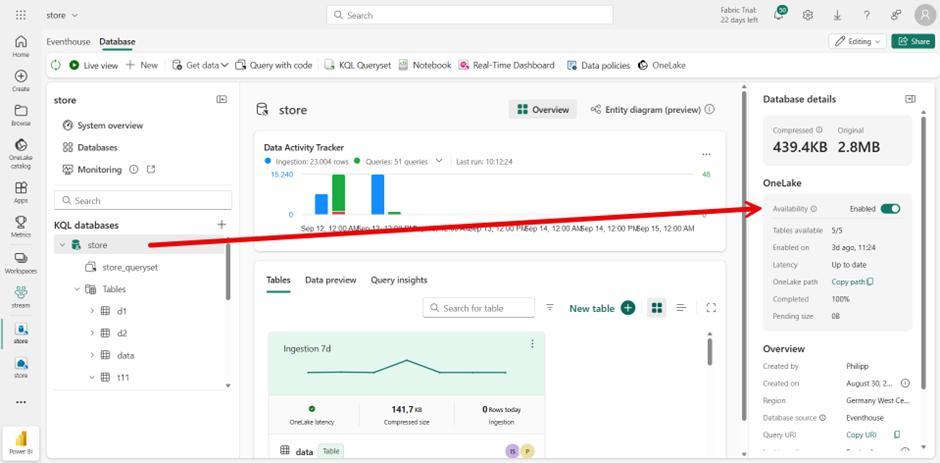

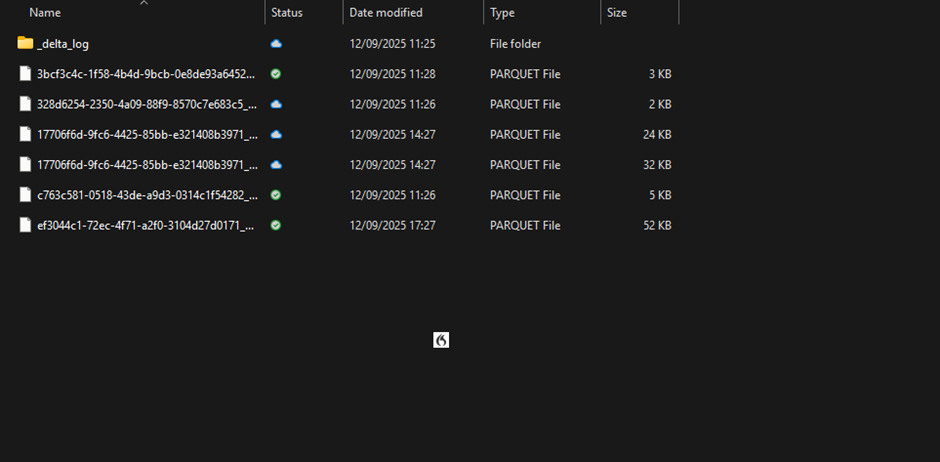

In a meeting with Frank Geisler, I learned how to save the table data in OneLake. However, it's important to note that, since these are compressed Kusto files, it can take one or two days before the files become visible.

To view the content of the files, I used the free web tool:

https://parquetreader.com/