If you work with Analysis Services on Azure, you process a cube differently than on premises. On premises you mostly use the SQL Server Agent for this, but that option is not available on Azure. There you can use Azure Automation, PowerShell, or Azure Data Factory (ADF). This post is dedicated to ADF, because you usually need to process a table, a partition, or the whole database right after a data load.

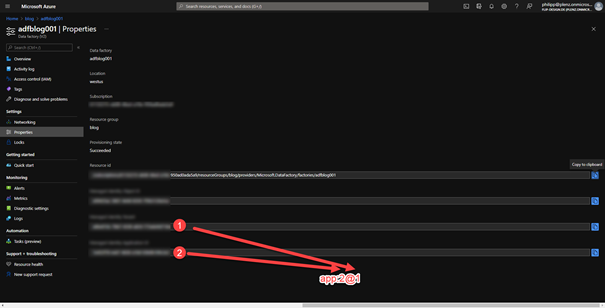

First, we need to grant the ADF permissions on the SSAS cube. To do this, we use the managed identity of the ADF. To get the required data, open the properties of the ADF in the Azure portal.

With the tenant ID and the ADF ID, we can build the credential:

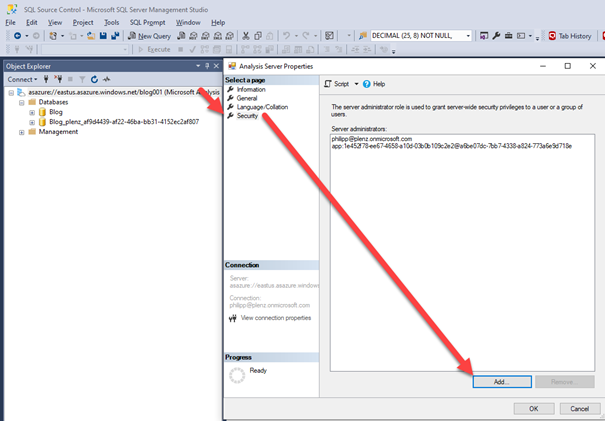

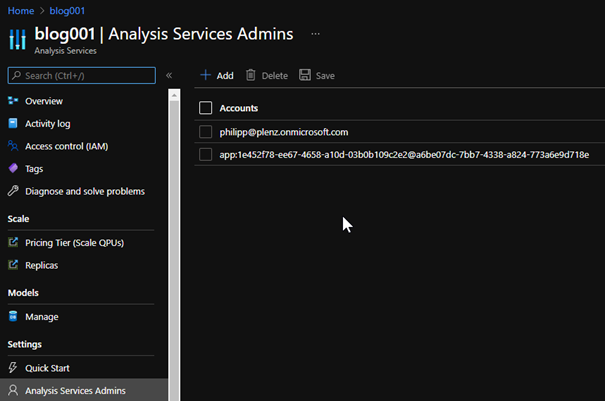
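As a minimal sketch, the credential string can also be assembled in code. This assumes that "ADF ID" means the managed identity application ID shown in the ADF properties, and that the entry uses the `app:<application ID>@<tenant ID>` form that Analysis Services accepts for service principals. The function name and the GUIDs are placeholders for illustration only.

```python
import uuid


def build_admin_credential(application_id: str, tenant_id: str) -> str:
    """Return the server administrator entry for a managed identity.

    Both values are parsed as GUIDs, so a truncated or mistyped ID fails
    here and not later in Management Studio.
    """
    app = uuid.UUID(application_id)  # raises ValueError if not a valid GUID
    tenant = uuid.UUID(tenant_id)
    return f"app:{app}@{tenant}"


# Placeholder GUIDs, not real IDs.
print(build_admin_credential(
    "11111111-2222-3333-4444-555555555555",
    "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee",
))
```

The printed value is what you would paste into the server administrators dialog in the next step.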

Next, connect to the AS instance with Management Studio, go to the server administrators, and add this user. This cannot be done in the Azure portal, but afterwards you can view the result there.

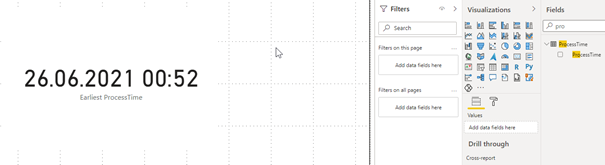

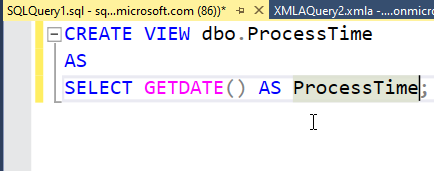

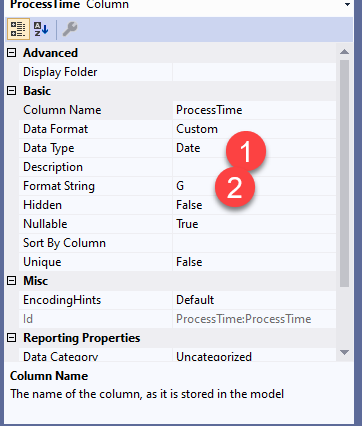

To check whether a refresh has worked, I added a view to my data source and imported its data into the cube.

Set the column's data type to date and its format to G, so the output also contains the time.

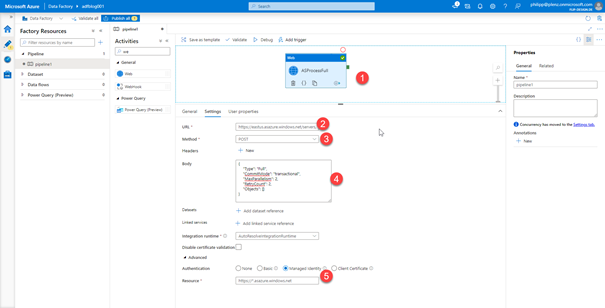

Okay, the infrastructure is ready! Now, we can add a new pipeline inside our ADF with a web activity.

The URL follows this schema: `https://<region>.asazure.windows.net/servers/<servername>/models/<modelname>/refreshes`
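To avoid typos when filling in the placeholders, the URL can be built from its three parts. This is only a sketch: the function name and the sample values (`westeurope`, `myserver`, `Sales Model`) are made up, and percent-encoding the server and model names is an assumption for names that contain spaces or special characters.

```python
from urllib.parse import quote


def build_refresh_url(region: str, server: str, model: str) -> str:
    """Fill the region, server and model into the refresh URL schema."""
    for name, value in (("region", region), ("server", server), ("model", model)):
        if not value:
            raise ValueError(f"{name} must not be empty")
    return (
        f"https://{region}.asazure.windows.net"
        f"/servers/{quote(server, safe='')}"
        f"/models/{quote(model, safe='')}/refreshes"
    )


# Hypothetical sample values.
print(build_refresh_url("westeurope", "myserver", "Sales Model"))
```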

For the fifth point, the authentication, add the following URL as the resource: `https://*.asazure.windows.net`

The following JSON request body initiates a full process:

    {
        "Type": "Full",
        "CommitMode": "transactional",
        "MaxParallelism": 2,
        "RetryCount": 2,
        "Objects": []
    }
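An empty `Objects` list processes the whole model. If you only need a single table or partition, the same body can list them. The sketch below generates both variants; the helper name and the table and partition names (`FactSales`, `FactSales2024`, `DimDate`) are hypothetical, and the `table`/`partition` keys follow the object format of the Analysis Services refresh REST API as I understand it.

```python
import json


def build_refresh_body(objects=None, refresh_type="Full",
                       max_parallelism=2, retry_count=2):
    """Build the request body for the web activity.

    objects: list of dicts such as {"table": "..."} or
             {"table": "...", "partition": "..."}; empty means whole model.
    """
    return {
        "Type": refresh_type,
        "CommitMode": "transactional",
        "MaxParallelism": max_parallelism,
        "RetryCount": retry_count,
        "Objects": list(objects or []),
    }


# Whole model, same as the body shown above.
whole_model = build_refresh_body()

# One partition plus one complete table (hypothetical names).
partial = build_refresh_body([
    {"table": "FactSales", "partition": "FactSales2024"},
    {"table": "DimDate"},
])

print(json.dumps(whole_model, indent=2))
print(json.dumps(partial, indent=2))
```

Paste the printed JSON into the body field of the web activity.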

After running the ADF pipeline you should get a full refresh of the cube: